Terrain-Adaptive Control for Mobile Quadrupeds Using Elevation Maps

Project information

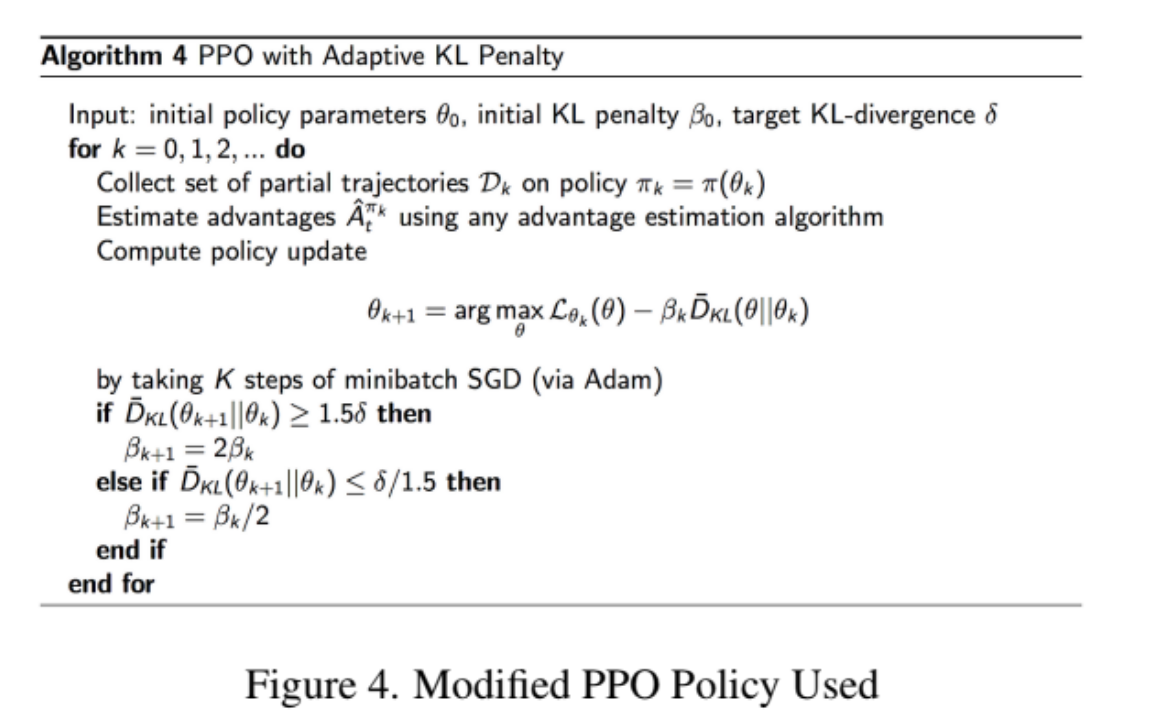

- Improved control policies for outdoor robots by integrating local elevation maps and training with Proximal Policy Optimization (PPO) to stabilize the Unitree A1's gait on complex terrain.

- Collected real-world data to adjust the reward functions and retrain the deep reinforcement learning policies, shortening each retraining cycle by training thousands of simulated robots in parallel.

- Trained a policy to navigate complex natural terrain from base-heading and linear-velocity commands, using a curriculum that adjusts terrain difficulty automatically, without manual tuning.

- Used proprioceptive measurements to compute a weighted reward that tracks velocity commands while favoring smoother motion and longer steps: it penalizes collisions, joint accelerations, torques, and action rates, and rewards foot air-time.

- Project Report: Link
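
The weighted reward and the terrain curriculum described in the bullets above can be sketched roughly as follows. This is a minimal illustration, not the project's implementation: the weights, the `sigma` tracking scale, the `StepInfo` fields, the exponential tracking term, and the promote/demote thresholds in `update_terrain_level` are all assumptions chosen for the example, loosely following common practice in legged-locomotion RL.

```python
import math
from dataclasses import dataclass, field

# Hypothetical weights: positive terms are rewarded, negative terms are penalized.
# The project's actual values are not stated in the source.
WEIGHTS = {
    "tracking": 1.0,       # velocity-command tracking
    "air_time": 0.5,       # foot air-time (encourages longer steps)
    "collision": -1.0,     # body/leg collisions
    "joint_acc": -2.5e-7,  # joint accelerations
    "torque": -1e-5,       # joint torques
    "action_rate": -0.01,  # change in action between steps
}


@dataclass
class StepInfo:
    """Per-step proprioceptive quantities (hypothetical container)."""
    cmd_vel: tuple                 # commanded (vx, vy) in m/s
    base_vel: tuple                # measured (vx, vy) in m/s
    air_times: list = field(default_factory=list)    # seconds, per foot
    num_collisions: int = 0
    joint_acc: list = field(default_factory=list)
    torques: list = field(default_factory=list)
    action: list = field(default_factory=list)
    prev_action: list = field(default_factory=list)


def sq_sum(values):
    """Sum of squares of an iterable of floats."""
    return sum(v * v for v in values)


def reward(info, weights=WEIGHTS, sigma=0.25):
    """Weighted sum of one tracking term, one air-time term, and four penalties."""
    err = sq_sum(c - v for c, v in zip(info.cmd_vel, info.base_vel))
    terms = {
        "tracking": math.exp(-err / sigma),  # equals 1.0 at perfect tracking
        "air_time": sum(info.air_times),
        "collision": float(info.num_collisions),
        "joint_acc": sq_sum(info.joint_acc),
        "torque": sq_sum(info.torques),
        "action_rate": sq_sum(a - p for a, p in zip(info.action, info.prev_action)),
    }
    return sum(weights[name] * value for name, value in terms.items())


def update_terrain_level(level, distance_walked, commanded_distance,
                         terrain_length=8.0, max_level=9):
    """Assumed curriculum rule: promote a robot that crosses more than half of
    its terrain tile, demote one that covers less than half of the distance
    its velocity command implied, otherwise keep the current level."""
    if distance_walked > 0.5 * terrain_length:
        return min(level + 1, max_level)
    if distance_walked < 0.5 * commanded_distance:
        return max(level - 1, 0)
    return level
```

In a massively parallel simulator the same arithmetic would typically be applied to batched tensors, one row per simulated robot, and `update_terrain_level` would run at each episode reset so that difficulty follows each robot's performance rather than a hand-set schedule.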